Bot traffic on the web has exploded in recent years. In this post you will learn the details of bot traffic and what you should do to minimize its negative impact on your data.

I was inspired to write this article after a remark from one of my clients:

“I was meeting with a person from Akamai and she indicated that the majority of our site traffic is likely bots, not people.”

Fortunately, in all my Google Analytics audits so far, I haven’t encountered anything like that.

Sit back and get ready to learn all about bot traffic and practical ways to deal with it.

Table of Contents

- Bot Traffic Trend

- How to Recognize Bot Traffic

- Large vs Small Websites

- How to Not Deal with Bot Traffic

- How to Deal with Bot Traffic

- Measurement Protocol

- Concluding Thoughts

Bot Traffic Trend

The Distil Networks Bad Bot Report (2018) indicates that bad bots have gone mainstream.

Malicious bot traffic varies per industry, but it is often in the range of 20 to 50+ percent of all traffic (!)

“Hey Paul, but you just said you didn’t encounter these bot traffic numbers in Google Analytics so far?”

That’s right. You need to understand that only a very small percentage of all bot traffic (potentially) shows up in Google Analytics.

However, for security and other reasons, you should be aware that bots can definitely harm your site. Hurting your Analytics stats is just one small part of the bigger issue.

Bad bots include, but are not limited to:

- DDoS.

- Site Scraping.

- Comment Spam.

- SEO Spam.

- Fraud.

In the rest of the article we’ll focus on the impact of bots on Google Analytics.

How to Recognize Bot Traffic

You need to know how you can recognize bot traffic before you can properly deal with it.

I have found that in 95%+ of cases you are in good shape if you monitor two things:

- Traffic (sessions) on hostname.

- High bounce rate on ISP dimension.

1. Traffic on Main Hostname

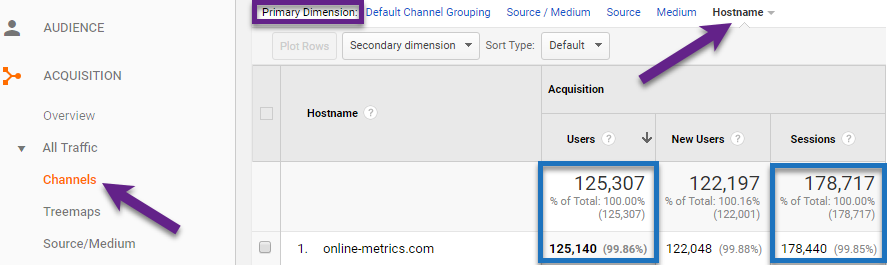

Step 1: Navigate to Acquisition > All Traffic > Channels.

Step 2: Change the primary dimension to Hostname (or add it as a secondary dimension).

- Here you want to see (nearly) 100% of sessions on your main website domains (the ones that are part of your implementation).

- (not set) and other unrecognized domains are an indication of bot and/or spam traffic.
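If you prefer to run this check on exported data (for example via the API), it boils down to a share-of-sessions calculation. Below is a minimal Python sketch, assuming a list of (hostname, sessions) rows and a set of hostnames where your tracking code is installed; all hostnames and numbers are made up for illustration.

```python
# Hypothetical export of the Hostname report: (hostname, sessions) rows.
# The hostnames and numbers below are invented for illustration.
rows = [
    ("www.example.com", 9_700),
    ("(not set)", 180),
    ("spam-referrer.example", 120),
]

# Assumption: these are the domains where the GA tracking code is installed.
OWN_HOSTNAMES = {"www.example.com"}


def hostname_shares(rows):
    """Return each hostname's share of total sessions, in percent."""
    total = sum(sessions for _, sessions in rows)
    return {host: 100 * sessions / total for host, sessions in rows}


def suspicious_hostnames(rows, own_hostnames):
    """Hostnames that are not part of the implementation, e.g. (not set)."""
    return [host for host, _ in rows if host not in own_hostnames]


shares = hostname_shares(rows)
own_share = sum(share for host, share in shares.items() if host in OWN_HOSTNAMES)
print(f"Share on own hostnames: {own_share:.1f}%")
print("Check these hostnames:", suspicious_hostnames(rows, OWN_HOSTNAMES))
```

A share clearly below 100% on your own hostnames is the signal to dig into the remaining rows.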

2. ISPs with High Bounce Rate

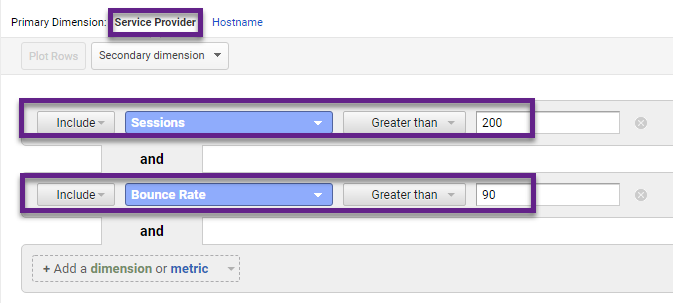

Step 1: Navigate to Audience > Technology > Network.

Step 2: Add an advanced table filter for ISPs with a substantial number of sessions (e.g. more than 200) and a bounce rate higher than 90%.

Step 3: Review report data.
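If you export this report (or query it through the API), the same table filter can be reproduced in a few lines of Python. This is a minimal sketch: the rows are invented, and the 200-session and 90% thresholds are simply the example values from Step 2.

```python
# Hypothetical rows from Audience > Technology > Network:
# (service provider, sessions, bounce rate in percent). Values are invented.
rows = [
    ("amazon.com inc.", 640, 98.4),
    ("example residential isp", 2_150, 52.3),
    ("example small isp", 45, 100.0),
]

MIN_SESSIONS = 200       # "substantial amount of sessions" (example value)
MAX_BOUNCE_RATE = 90.0   # bounce rate above this is suspicious (example value)


def suspicious_isps(rows, min_sessions=MIN_SESSIONS, max_bounce=MAX_BOUNCE_RATE):
    """Mirror the table filter: many sessions AND a very high bounce rate."""
    return [
        isp
        for isp, sessions, bounce_rate in rows
        if sessions > min_sessions and bounce_rate > max_bounce
    ]


print(suspicious_isps(rows))
```

Requiring both conditions keeps tiny ISPs with a handful of bounced sessions (like the third row) out of the list, so you only review providers that actually move your numbers.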

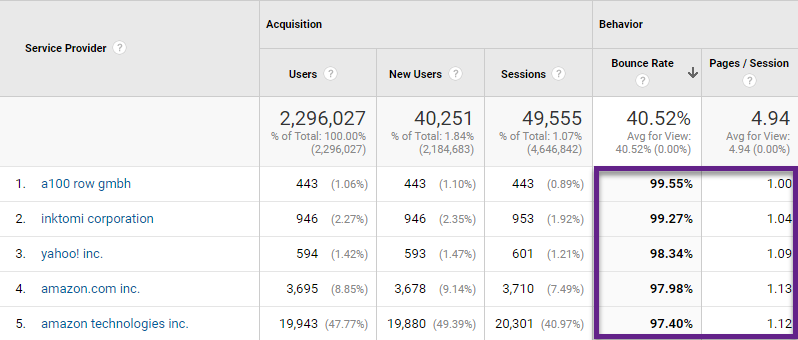

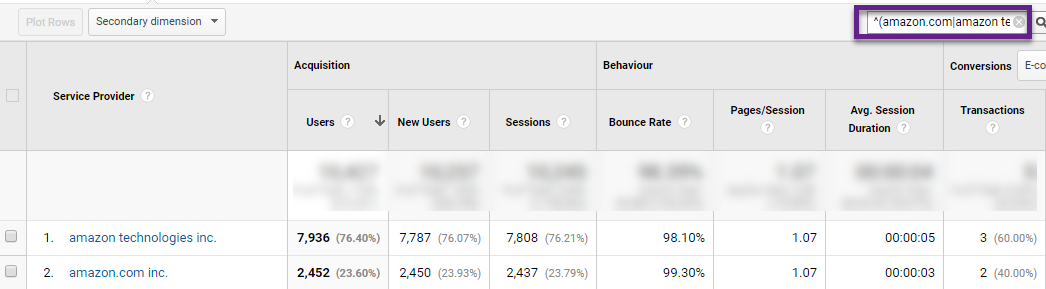

As you can see, Google Analytics hasn’t yet blocked bots running on Amazon’s AWS infrastructure.

You will find that this portion of “traffic” often comes from the city of Ashburn (Virginia), which is home to one of the biggest concentrations of data centers.

The proportion of bot traffic is rather low in this case, so it won’t have a big impact on overall numbers and data-driven business decisions.

This is a good start to spot potential bot traffic issues in Google Analytics. Additionally, you could look into “source/medium” combinations, but I have found the two strategies above to be more effective.

Recommended resources:

- How to Quickly Discover and Solve (Not Set) Issues in Google Analytics (includes Service Provider dimension).

- Google Analytics API: The Non-Technical Guide (if you want to query this data outside of GA).

Large vs Small Websites

Here is the thing: you should always take the impact of bot traffic seriously, whether your website is small or large.

Based on my experience, I can say:

- The impact on data quality on smaller websites (< 10k sessions/month) can be relatively high. This is due to the relatively large proportion of bot traffic on these sites.

- In general, bot traffic in Google Analytics grows as overall traffic grows, but at a slower pace.
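A quick calculation shows why the relative impact differs. The numbers below are hypothetical: total traffic is 100 times larger on the big site, while bot traffic is only 10 times larger.

```python
def bot_share(bot_sessions, total_sessions):
    """Percentage of all sessions that come from bots."""
    return 100 * bot_sessions / total_sessions


# Hypothetical monthly numbers: total traffic grows 100x, bot traffic only 10x.
small = bot_share(bot_sessions=500, total_sessions=5_000)
large = bot_share(bot_sessions=5_000, total_sessions=500_000)
print(f"small site: {small:.1f}% bots, large site: {large:.1f}% bots")
```

With these assumptions, bots make up 10% of the small site’s sessions but only 1% of the large site’s.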

Note: malicious bot traffic – not measured in GA – is often a bigger threat for relatively high-traffic websites.

How to Not Deal with Bot Traffic

Let’s first have a quick chat on how you should not deal with bot traffic in Google Analytics.

In the past I have come across many articles that advise applying an exclude filter on the “hostname” and/or “source/medium” dimension.

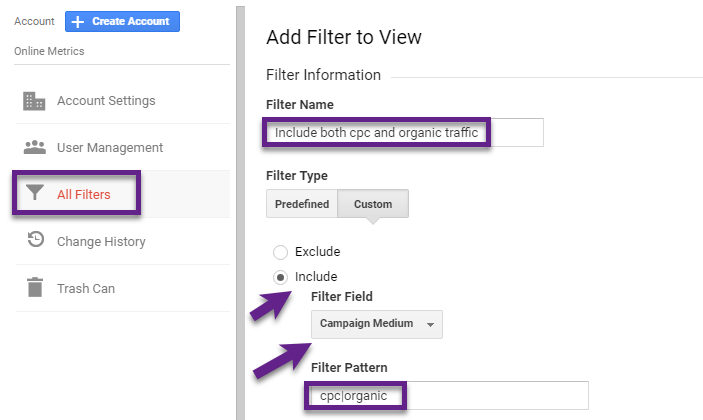

A confusing but very important point about include and exclude filters in Google Analytics:

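- You can apply multiple exclude filters on the same dimension, for example:

  - Filter 1: exclude medium = cpc.

  - Filter 2: exclude medium = organic.

- However, applying more than one include filter on the same dimension will result in no data at all. Filters are applied one after another, so once the first include filter keeps only cpc traffic, a second include filter for organic has nothing left to match.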
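This behaviour follows from the fact that view filters are applied in the order they are listed, each one working on the output of the previous filter. The Python sketch below simulates that on a handful of hypothetical hits; the include and exclude helpers are illustrative stand-ins for GA filters, not GA code.

```python
import re

# Hypothetical hits; only the "medium" dimension matters here.
hits = [{"medium": m} for m in ["cpc", "organic", "referral", "cpc", "email"]]


def include(hits, field, pattern):
    """Keep only hits whose field matches the regex (like an include filter)."""
    return [h for h in hits if re.search(pattern, h[field])]


def exclude(hits, field, pattern):
    """Drop hits whose field matches the regex (like an exclude filter)."""
    return [h for h in hits if not re.search(pattern, h[field])]


# Filters run one after another, each on the output of the previous one.
two_excludes = exclude(exclude(hits, "medium", "^cpc$"), "medium", "^organic$")
two_includes = include(include(hits, "medium", "^cpc$"), "medium", "^organic$")
one_regex_include = include(hits, "medium", "^(cpc|organic)$")

print([h["medium"] for h in two_excludes])       # ['referral', 'email']
print(two_includes)                              # []
print([h["medium"] for h in one_regex_include])  # ['cpc', 'organic', 'cpc']
```

The anchors (^ and $) matter because a filter pattern matches anywhere in the value unless you anchor it.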

So if you want to include both the cpc and organic mediums, you need to combine them in a single include filter using a regular expression, such as ^(cpc|organic)$.

An example of such a filter is shown below:

Back to the exclude filter on “hostname” and/or “source/medium”.

The problem here is that the list of potential bots you want to filter keeps on growing (as long as Google doesn’t provide a robust solution).

You need to continuously monitor your Google Analytics data and several dimensions to keep your exclude list up to date. That’s why, in this case, I limit myself to using exclude filters on the ISP dimension.

How to Deal with Bot Traffic

Now let’s look into actionable steps to greatly reduce bot and spam traffic in your Google Analytics account.

Modify View Level Setting

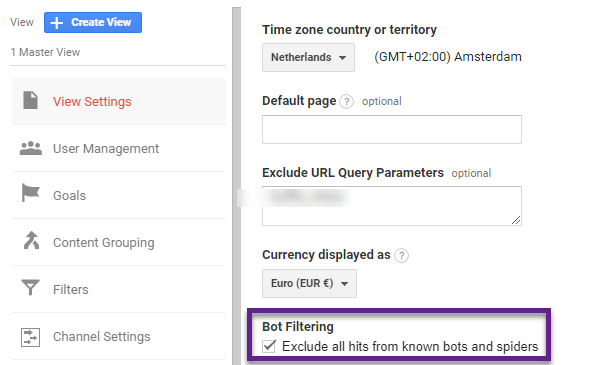

In Google Analytics, you can tick a box at the view level (View Settings > Bot Filtering: “Exclude all hits from known bots and spiders”) to filter out “known” bots and spiders.

I recommend checking this box in all views except the “Raw Data View”.

Note: you can set up an extra view with this box unchecked if you want to know what data is excluded by checking this box.

Hostname Filter

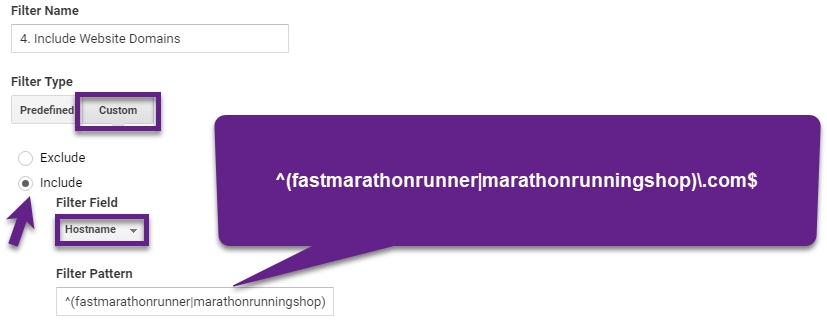

A very useful strategy to reduce the amount of bot and spam traffic is configuring a view-level filter.

As a best practice, I recommend setting up a hostname filter on the domains where you have implemented the GA tracking code. Most often this is one domain, but you might have subdomain or cross-domain tracking enabled.

Here is an advanced example:

- First domain: fastmarathonrunner.com.

- Second domain: marathonrunningshop.com.

This will ensure that traffic with hostname “(not set)” or another unknown hostname won’t appear in your Google Analytics views.
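To check a hostname pattern before saving the filter, you can test it against a few hostnames. The sketch below uses Python’s re module and a hypothetical include pattern for the two example domains that also allows www. and other subdomains; the exact pattern you need depends on your own setup. The simple syntax used here should carry over to GA’s filter pattern field, but verify it there as well.

```python
import re

# Hypothetical include pattern for the two example domains.
# Allows the bare domain plus any subdomain (www., shop., ...).
HOSTNAME_INCLUDE = re.compile(
    r"^([a-z0-9-]+\.)*(fastmarathonrunner|marathonrunningshop)\.com$",
    re.IGNORECASE,
)

candidates = [
    "fastmarathonrunner.com",
    "www.marathonrunningshop.com",
    "shop.fastmarathonrunner.com",
    "(not set)",
    "fastmarathonrunner.com.spam-site.xyz",  # spoofed lookalike hostname
]

for hostname in candidates:
    kept = bool(HOSTNAME_INCLUDE.search(hostname))
    print(f"{hostname:40} {'kept' if kept else 'filtered out'}")
```

Anchoring the pattern with $ is what stops lookalike hostnames that merely contain your domain from slipping through.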

Once again, don’t apply this filter (and filters in general) to the “Raw Data View”.

Note: never set up two different “include” hostname filters in this case. It will result in zero traffic in your view(s).

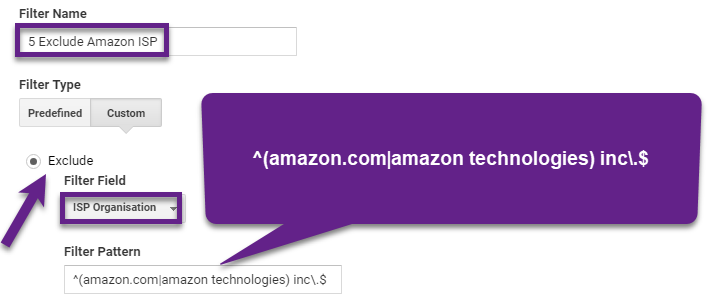

ISP Filter

Earlier in this post we discussed how to recognize “bot traffic” in general.

We saw that on the ISP level you can recognize a portion of the “bot traffic” that is sent to Google Analytics.

The two Service Providers that sent a substantial amount of traffic were amazon.com inc. and amazon technologies inc.

In this case, we want to use an “exclude” ISP filter, because the hostname of this traffic matches the hostname filter we set up before, so that filter alone won’t remove it.

This is what it looks like:

Note: the filter field “ISP Organization” corresponds to the “Service Provider” dimension in your reports.

Quick tip: you can directly verify the regular expression in the corresponding Google Analytics report (Audience > Technology > Network) by using it in the advanced table filter.

Note: read this in-depth guide on Regular Expressions if you want to learn more about how I create these filters.
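You can also test an exclude pattern offline before saving the filter. The sketch below assumes the pattern ^amazon(\.com| technologies) inc\.$ for the two Amazon service providers mentioned above; it is one possible pattern, not the only correct one, and the third provider name is hypothetical.

```python
import re

# One possible exclude pattern for the "ISP Organization" filter field,
# covering "amazon.com inc." and "amazon technologies inc.".
ISP_EXCLUDE = re.compile(r"^amazon(\.com| technologies) inc\.$", re.IGNORECASE)

providers = [
    "amazon.com inc.",
    "amazon technologies inc.",
    "example broadband isp",  # hypothetical provider of real visitors
]

excluded = [p for p in providers if ISP_EXCLUDE.search(p)]
print(excluded)  # ['amazon.com inc.', 'amazon technologies inc.']
```

Escaping the dots (\.) keeps them literal, so the pattern matches only these exact provider names.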

Custom Alerts

The three previously mentioned strategies will get you up to speed with filtering out bot traffic.

Sometimes you want to monitor suspicious traffic inside or outside of Google Analytics.

Within Google Analytics, you can use custom alerts to monitor sudden changes in traffic that might not be genuine.

Read this post to learn more about Custom Alerts and how to make them extremely useful.
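Custom alerts in GA are built on conditions such as a metric increasing by more than a given percentage compared with an earlier period (for example, the same day in the previous week). If you monitor traffic outside of GA instead, the same check is easy to sketch; the 50% threshold and the session counts below are assumptions for illustration.

```python
def percent_change(current, previous):
    """Relative change in percent versus the baseline period."""
    if previous == 0:
        raise ValueError("baseline is zero; percent change is undefined")
    return 100 * (current - previous) / previous


def should_alert(current, previous, threshold_pct=50.0):
    """Alert when sessions rise by more than threshold_pct versus the baseline."""
    return percent_change(current, previous) > threshold_pct


# Hypothetical daily sessions: same weekday last week vs. today.
print(should_alert(current=1_900, previous=1_000))  # True  (+90%)
print(should_alert(current=1_100, previous=1_000))  # False (+10%)
```

Comparing against the same weekday rather than the previous day avoids false alarms from normal weekday/weekend patterns.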

Measurement Protocol

Are you an advanced GA user? Then there is one more thing to take into account.

Some of you might send data to Google Analytics from other sources, e.g. offline data.

This is where the Measurement Protocol comes into play.

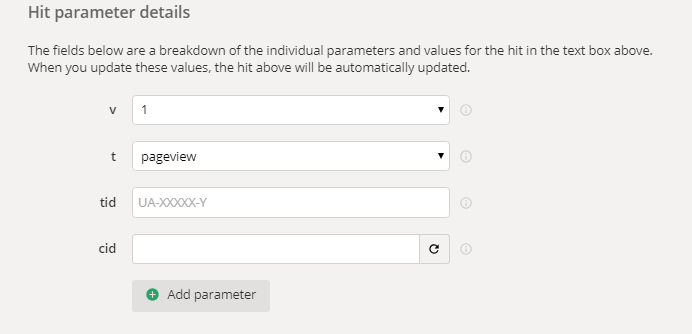

You can (but don’t have to) define the Document Host Name (dh) when sending these hits to Google Analytics.

Here you can check out the Measurement Protocol Hit Builder if you want to learn more.

The required parameters are:

- Measurement Protocol Version (v).

- Hit Type (t).

- Tracking ID (tid).

- Client ID (cid).
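To make these parameters concrete, here is a minimal Python sketch that assembles a Universal Analytics Measurement Protocol payload with the four required parameters (v, tid, cid, t) plus the optional Document Host Name (dh). The tracking ID, client ID and event values are placeholders, and the build_hit helper is hypothetical; the code only builds the payload and does not send it.

```python
from urllib.parse import urlencode

# Universal Analytics Measurement Protocol (v1) collection endpoint.
COLLECT_URL = "https://www.google-analytics.com/collect"


def build_hit(tracking_id, client_id, hit_type="event", document_host=None, **extra):
    """Build a payload with the required parameters (v, tid, cid, t).

    document_host (dh) is optional in the protocol, but hits sent without it
    can end up with hostname (not set) in your reports.
    """
    params = {"v": "1", "tid": tracking_id, "cid": client_id, "t": hit_type}
    if document_host is not None:
        params["dh"] = document_host
    params.update(extra)  # e.g. ec/ea for event category/action
    return urlencode(params)


payload = build_hit(
    "UA-XXXXXXX-1",                          # placeholder property ID
    "35009a79-1a05-49d7-b876-2b884d0f825b",  # hypothetical client ID
    document_host="fastmarathonrunner.com",  # matches the hostname filter
    ec="offline",                            # hypothetical event category
    ea="purchase",                           # hypothetical event action
)
print(payload)
# To actually send it, POST the payload to COLLECT_URL (e.g. with urllib.request).
```

Setting dh to a hostname that your include filter accepts is what keeps these hits visible in your filtered views.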

Here comes the issue:


- Many companies don’t define the Document Host Name for all hits that are sent (as it is not required).

- This causes the hostname of these hits to show up as (not set).

- The “include” hostname filter that we previously discussed would exclude these hits in this case.

Take this into account when using the Measurement Protocol and a hostname filter at the same time!

Concluding Thoughts

Here are a few last remarks regarding bot and spam filtering in Google Analytics.

- Be aware that only a small proportion of all bot and spam traffic will show up in Google Analytics.

- The impact on data reliability is the largest on sites with low traffic numbers.

- There are a few ways to identify where bot traffic in GA is coming from.

- It pays to learn more about filters and regular expressions in GA, not only for filtering bot traffic but for many other reasons.

- There are three main ways to filter out bots and spam:

  - Check the box in your Google Analytics views (not in Raw Data View).

  - Set up an include hostname filter.

  - Set up an exclude ISP filter.

- Extra: use custom alerts to monitor suspicious traffic sources.

- Be aware that using the Measurement Protocol might conflict with your hostname filter.

This is it from my side! What are your thoughts on bot and spam traffic in Google Analytics? What precautionary measures do you take? Happy to hear your comments!

One last thing... Make sure to get my automated Google Analytics 4 Audit Tool. It contains 30 key health checks on the GA4 Setup.

As usual, Paul, a great article! And as you mentioned, behind all of this, it is a way to identify bad traffic, then optimize servers or code (depending on the situation) and thereby improve performance, which I consider the most important indicator for conversion and user experience on a website.

Thanks again ;)

Thanks for the heads up Nicolas! Yes, it is one thing to identify bad traffic in GA and on your website in general. Solving GA issues is relatively easy compared to solving bot and spam issues in general. Something which is very important for conversions, user experience and security!

I always require my team to use a property ID with a suffix above 10 (e.g. UA-XXXXXXX-18) for new client sites, as we have found that many bots target suffixes in the 1-3 range, since these sites are typically low-hanging fruit run by inexperienced webmasters/analysts (although there is little we can do about legacy accounts in this range with a robust data history).

Interesting comment! But does this mean you set up all new clients in the same GA account? In general, I highly recommend having your clients set up their own account and give you access afterwards, and refraining from having multiple clients in one single GA account. Further, in my experience most sites, including those set up by experienced analysts, are set up on -1 or -2, as this is the most logical way to approach the GA setup.

Thanks, Paul. This is great. I have a new client and immediately detected that 95% of their traffic was coming from bots. It was easy to see because it was all in direct traffic and 100% bounced. I managed to filter it out by identifying that they all came from one host name. But I was concerned that all those bounces might still be sending the wrong SEO signals to Google, so I gathered up the list of secondary dimension “Network Domains” and sent those to the host who proceeded to block them at the server level for me. Now the direct traffic in my View that shows all is the same as in my filtered View. So it appears we have successfully blocked the bots before they can even trigger a Pageview in Analytics. I’m happy with the results. Was my extra step necessary for better SEO? Too soon to tell with this client, and I may never know because this is a new client who did not even have Analytics active before me so I don’t have prior data. If SEOs are reading this, I’d love to know what they think. Hopefully Google is smart enough to recognize a bot when it “sees” it and disregards their activity. Is there data to support that? Inquiring minds like mine want to know.

Hi Kathy,

Great to hear you got it resolved. As far as I know, there is no direct impact on SEO. However, blocking at the server level – if you have the knowledge/assistance to do so – is always good to do. As you won’t need filters in that case to accomplish the same. And, the blocking rules are applied at the property level.

Best,

Paul