Google Analytics sampling can ruin your data and insights. In this post you will learn how sampling works and how you can deal with it in the best way.

Understanding at least the basics of sampling is crucial for everybody who is actively involved with Google Analytics.

In general, sampling is not a big issue on smaller websites with fewer than 50K sessions each month. However, it all depends on the time period that you select.

You have to be more careful when analyzing your data if millions of people visit your website each month.

In that case, chances are high that you will run into sampling issues.

The first part of this article deals with sampling in Google Analytics and how it works.

In the second part I discuss eight strategies to solve or at least limit the impact of sampling on your data in Universal Analytics.

Note: read this post if you want to learn about sampling in Google Analytics 4 (GA4).

How Google Analytics Sampling Works

Each Google Analytics account consists of one or more properties and one or more views.

Unfiltered data is stored in a property based on a unique property number.

And then in each reporting view a set of pre-aggregated and unsampled data tables is stored (and processed on a daily basis).

All the basic or standard reports that you see in Google Analytics can be displayed rather quickly because of this pre-aggregated data.

However, sometimes you need to run ad-hoc queries to retrieve custom data.

Examples of ad-hoc queries include:

- Applying segments to default reports.

- Applying secondary dimensions to default reports.

- Creating custom reports.

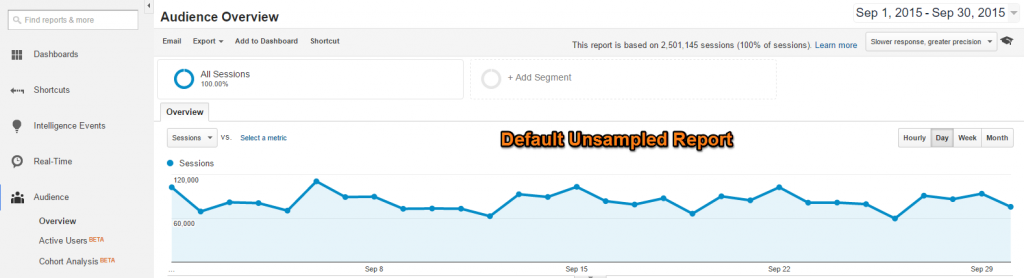

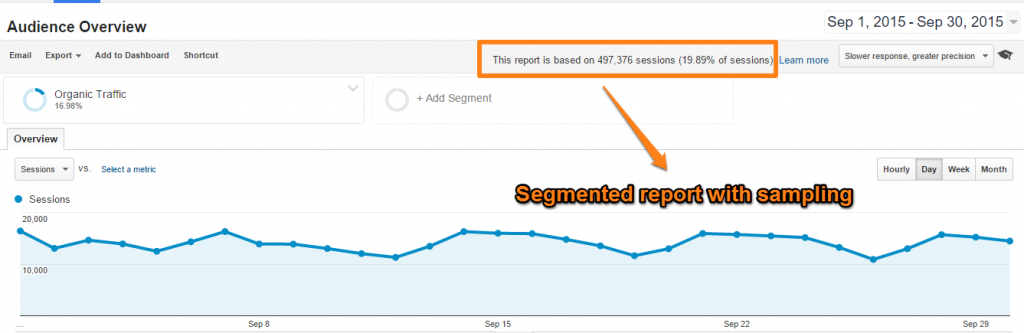

Let’s take a look at the report below (segment applied on organic traffic):

Less than 20% of the overall sessions are used to generate this report.

So make sure to watch for any message containing "This report is based on N sessions". That’s a signal that the resulting report is sampled.

The sampling level or threshold in Google Analytics for ad-hoc reports is 500k sessions (and 25M for Premium).
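To get a feel for what this threshold means in practice, here is a minimal Python sketch. It assumes that a sampled report is built from roughly as many sessions as the threshold allows, which is a simplification. The function name and the constants are illustrative only, and the percentage that Google Analytics shows in the interface may differ.

```python
# Thresholds as quoted in this post; treat them as assumptions.
STANDARD_THRESHOLD = 500_000
PREMIUM_THRESHOLD = 25_000_000


def estimated_sample_share(sessions_in_range: int,
                           threshold: int = STANDARD_THRESHOLD) -> float:
    """Rough share of sessions an ad-hoc report is based on (1.0 = unsampled).

    This is a back-of-the-envelope estimate, not Google's actual algorithm.
    """
    if sessions_in_range <= 0:
        raise ValueError("sessions_in_range must be positive")
    return min(1.0, threshold / sessions_in_range)


if __name__ == "__main__":
    for sessions in (300_000, 1_000_000, 3_000_000):
        share = estimated_sample_share(sessions)
        print(f"{sessions:>9,} sessions -> roughly {share:.0%} in the report")
```

Under this assumption, a property with 3M sessions in the selected date range would end up with a report based on less than 20% of its sessions.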

Is Sampling Really an Issue?

It depends. Reports that are created around 90+% of your data are quite reliable in my experience.

However, if the percentage of sessions involved drops far below 75%, the risk of taking big decisions on inaccurate data is a lot higher.

To make it simple (it’s not completely true statistically):

- 25% of sessions in sample -> 25% confidence in data being correct.

- 50% of sessions in sample -> 50% confidence in data being correct.

- 75% of sessions in sample -> 75% confidence in data being correct.

Just do the maths and ask yourself how high this percentage needs to be for you to feel comfortable making decisions.

And it probably differs according to the situation.

Would you need a higher percentage for a decision worth millions than for a trend graph you make for a colleague? I think so!

Read this support article by Google if you want to know all the details around how sampling works.

Eight Solutions for Google Analytics Sampling

There are many different ways to approach and deal with sampling.

In the following chapters I describe eight methods you could use.

Some are easy to implement and others require some extra coding.

1. Adjust Your Date Range

Let’s assume you are looking at a one-year period and notice that the report is based on a 20% sample.

What you could do is simply reduce the time span to a two-month period to get rid of sampling issues.

You can then use the Google Analytics API to automate the data export in two-month date ranges, or aggregate the exports in a spreadsheet yourself.
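Splitting a long period into shorter date ranges is easy to script. The sketch below is one possible way to do it in Python with the standard library only. The helper names `add_months` and `split_date_range` are made up for this example, and the chunks are aligned to calendar months as a design choice.

```python
from datetime import date, timedelta


def add_months(day: date, months: int) -> date:
    """Return the first day of the month that lies `months` after `day`'s month."""
    index = day.year * 12 + (day.month - 1) + months
    return date(index // 12, index % 12 + 1, 1)


def split_date_range(start: date, end: date, months_per_chunk: int = 2):
    """Split [start, end] (inclusive) into consecutive chunks of calendar months."""
    if start > end:
        raise ValueError("start must not be after end")
    if months_per_chunk < 1:
        raise ValueError("months_per_chunk must be at least 1")
    chunks = []
    chunk_start = start
    while chunk_start <= end:
        next_start = add_months(chunk_start, months_per_chunk)
        # The chunk ends the day before the next one starts, capped at `end`.
        chunk_end = min(end, next_start - timedelta(days=1))
        chunks.append((chunk_start, chunk_end))
        chunk_start = next_start
    return chunks


if __name__ == "__main__":
    for chunk_start, chunk_end in split_date_range(date(2016, 1, 1), date(2016, 12, 31)):
        print(chunk_start.isoformat(), "to", chunk_end.isoformat())
```

Each chunk can then be queried separately and the results combined afterwards, as long as every chunk stays below the sampling threshold.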

Remember that to avoid sampling, the selected date range needs to contain fewer than 500k sessions within the associated property.

2. Use Standard Reports

Google Analytics counts dozens of useful standard reports. These reports can show millions of sessions without sampling problems.

They are pre-aggregated on a daily basis and never sampled.

I’m not saying that limiting yourself to standard reports is the perfect solution, but it works!

3. Create New Views with Filters

One of the reasons that I create many different views per property is to overcome Google Analytics sampling issues.

Let’s assume you are a big spender in paid search.

Instead of applying a paid search segment to the overall data, you could create a separate view with a filter on paid search.

Outcome:

- The segmented standard report shows sampled data.

- The standard report in a new view with filter on paid search would not have any sampling issues.

I recommend creating new views for traffic segments that are useful to analyze and optimize on a regular basis.

Read this ultimate guide to Google Analytics filters to further educate yourself on this topic.

4. Reduce the Amount of Traffic per Property

The collection of data of multiple sites in one property might lead to sampling as well.

Here is an example:

- You are the owner of a large soccer brand with 20 different websites.

- Each website records 50k sessions in Google Analytics each month (in same property).

- This makes 1M sessions in total.

Instead of recording all sessions in one property, why not use a separate property for each of your websites?

Of course, it all depends on the structure and how they relate to each other.

However, if you can break it down, you won’t have any sampling issues for a 10-month time period. Not bad I think!
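The numbers in this example are easy to verify. The short Python sketch below assumes the 500k threshold quoted earlier in this post and treats a date range that lands exactly on the threshold as still unsampled. The function name is made up for illustration.

```python
SAMPLING_THRESHOLD = 500_000  # sessions per date range, as quoted in this post


def unsampled_months(monthly_sessions: int,
                     threshold: int = SAMPLING_THRESHOLD) -> int:
    """Whole months of data that stay at or below the threshold."""
    if monthly_sessions <= 0:
        raise ValueError("monthly_sessions must be positive")
    return threshold // monthly_sessions


sites = 20
sessions_per_site = 50_000
combined = sites * sessions_per_site

print(combined)                             # 1000000
print(unsampled_months(combined))           # 0
print(unsampled_months(sessions_per_site))  # 10
```

With everything in one property, even a single month exceeds the threshold. With one property per website, ten months fit within it.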

5. Sample Your Data by Modifying Tracking Code

Another option is to modify the GA tracking code.

By sampling the hits for your site or app, you will get reliable report results while staying within the hit limits for your account.

Data collection sampling occurs consistently across users. Therefore, once a user has been selected for data collection, all sessions for the user will send data to GA. This includes future sessions as well.
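To illustrate what "consistently across users" means, here is a minimal Python sketch of user-level sampling. It is not Google's implementation. It only shows the general idea of deriving the decision from a stable user identifier, so that the same user is always in or always out. The function name, the hashing approach and the `user-…` identifiers are assumptions made for this example.

```python
import hashlib


def is_user_sampled_in(client_id: str, sample_rate_percent: float) -> bool:
    """Decide deterministically whether a user's hits should be collected.

    Illustration only: the same client_id always gets the same answer,
    which mirrors the consistency described above.
    """
    if not 0 <= sample_rate_percent <= 100:
        raise ValueError("sample_rate_percent must be between 0 and 100")
    digest = hashlib.sha256(client_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # stable bucket in 0..9999
    return bucket < sample_rate_percent * 100


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    collected = sum(is_user_sampled_in(user, 10) for user in users)
    print(f"{collected} of {len(users)} users selected at a 10% sample rate")
```

Because the decision depends only on the identifier, a returning user does not flip between being tracked and not being tracked from one session to the next.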

Here are four useful links to help you set up sample rates:

- Universal Analytics – website tracking.

- Classic Analytics – website tracking.

- Android apps – mobile app tracking.

- iOS apps – mobile app tracking.

6. Use Google Analytics API

The Google Analytics API is a neat solution for dealing with sampling in Google Analytics.

You can run at most 50,000 requests per project per day, and this limit can be increased.

Especially if you run a highly trafficked website, you might want to consider the API for sampling issues.

For example, you could run one or more calls on a daily basis on your desired set of metrics and dimensions. And further aggregate it in Google Sheets.

Take into account that user related metrics might be biased if you use this method.
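A daily export could be prepared along these lines. The Python sketch below only builds request bodies in the shape used by the Reporting API v4 and checks a response for sampling. It does not send anything. The view ID is a placeholder, and authentication plus the actual API call are left out on purpose.

```python
from datetime import date, timedelta


def daily_report_requests(view_id, start, end, metrics, dimensions):
    """Build one Reporting API v4 style request body per day in [start, end]."""
    bodies = []
    day = start
    while day <= end:
        iso_day = day.isoformat()
        bodies.append({
            "reportRequests": [{
                "viewId": view_id,
                "dateRanges": [{"startDate": iso_day, "endDate": iso_day}],
                "metrics": [{"expression": m} for m in metrics],
                "dimensions": [{"name": d} for d in dimensions],
                "samplingLevel": "LARGE",  # ask for the highest precision
            }]
        })
        day += timedelta(days=1)
    return bodies


def is_sampled(report: dict) -> bool:
    """A v4 report includes samplesReadCounts only when it is based on a sample."""
    return bool(report.get("data", {}).get("samplesReadCounts"))


if __name__ == "__main__":
    bodies = daily_report_requests(
        "123456789",  # hypothetical view ID
        date(2016, 3, 1), date(2016, 3, 7),
        metrics=["ga:sessions"], dimensions=["ga:medium"],
    )
    print(len(bodies), "request bodies prepared")
```

Additive metrics such as `ga:sessions` can be summed across the daily results. `ga:users` cannot, because a user who visits on several days would be counted once for each of those days.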

Review this page for all the configuration and reporting API limits in Google Analytics.

I recommend watching this video about “Best Practices with Google Analytics API” as well:

7. Use Google Analytics Premium or Adobe Analytics

Among the paid tools, both Google Analytics Premium and Adobe Analytics deal with sampling effectively.

Sampling in Google Analytics Premium occurs for date ranges that exceed 25M sessions (compared to 500k in the standard version).

For Google Analytics Premium, sampling occurs at the view level (instead of property level).

Keep in mind that if you are working on a smaller website, these solutions are probably not in scope.

Note: I am not affiliated with either of these tools.

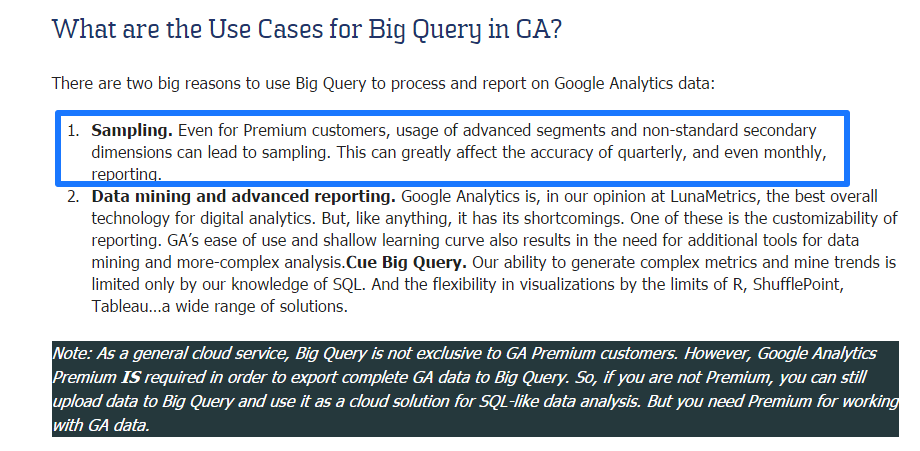

8. Use BigQuery

The last solution I want to touch upon is BigQuery for GA.

LunaMetrics has written quite a few articles about BigQuery, and I recommend reading this one if you want to learn more.

If you have time, this is a useful 10-minute video to watch as well:

Do you have to deal with sampling in Google Analytics? What methods do you use to keep your data as accurate as possible?

Thanks for the advice! I think the best way to guarantee you are not dealing with sampling again and again, is to get data out of Google Analytics using API and store it in Google Cloud or in a database.

I wouldn’t use Google Sheets with Google Analytics API, because the amount of data there is pretty limited: the maximum number of cells is 400,000, and the total number of cells that contain formulas can’t be more than 40,000.

Messing with Google Analytics API on your own is pretty tricky too. Once GA releases a new version (API v4 is here), all your dashboards and code break, and you have to start almost from scratch.

I use tools that have connectors to API and deal with everything for me like Analytics Canvas.

Hi Olga, thank you for your comment! You are right about needing a (probably) paid tool like Analytics Canvas if you aim for a robust solution.

I assume you query your data per month (or even per week) automatically to avoid sampling, and that it gets consolidated afterwards. Have you found this method to skew any other metrics in GA?

Best,

Paul

I think sampling happens on the property level, not the view level. So splitting in different views will not help. https://support.google.com/analytics/answer/2637192?hl=en

Hi Béate,

It’s true that sampling is applied at the property level. However, it still can be effective to use different views. I will show you an example.

Let’s assume you have a website with 2 million sessions in a month and 400,000 come from paid search.

1) A view with no filters applied. If you segment on paid search, you will run into sampling when you select one month of data.

2) A view with a filter on paid search. This view collects 400,000 sessions each month. As long as you use the overall reports (no secondary dimension, custom report or segment applied) you will see accurate report data for paid search. So creating multiple views can be very effective to analyze particular segments in more depth without having a sampling risk.

Hope this helps!

Paul

While these are good ways to deal with sampling, they are just that, ways to deal. It shows that there isn’t a good solution overall, outside of paying (Analytics 360/Adobe Analytics).

I love the thought around how if you are only getting 25% of your data in a report, you could look at that as having 25% confidence. That can make a compelling argument to management if you are trying to upgrade to a paid solution, or get further funding.

Hi Chris,

You are right here, no 100% solution. Unless you can afford to change to using paid tools.

The "25% of sessions in a sample -> 25% confidence" rule is a personal, soft approach to this issue. However, it also greatly depends on the actual size of your sample/population. For me it’s always a “no go” if less than 70-80% of the sessions are in the sample. And it also depends on what kind of decision you will base on your data. The higher the risk, the higher the confidence needed.

I have actually just searched on Google and found a great article written by LunaMetrics that goes a lot deeper on this:

http://www.lunametrics.com/blog/2016/03/03/accurate-sampling-google-analytics/

Best,

Paul

Totally agree with you on data sampling. One of my clients’ GA accounts always runs into this issue since monthly sessions exceed the free GA limits.

However, I have a small workaround for this. Instead of using GA’s pre-built segments, you can make use of the standard reports in the left menu.

For example, say you are after organic unique visitors. Instead of selecting the pre-built segment that gives you sampled data, you can get unsampled data by going to Acquisition > All traffic > Source/medium and then searching for “organic”.

Thanks Peter, that definitely works in some cases. However, you can’t replicate it via the regular interface / navigation paths if you work with more advanced segments in GA.